Attention mechanisms in transformers help you understand how models focus on different parts of input data to make predictions. They assign importance scores to tokens or features, highlighting which elements influence the output most. By visualizing these attention patterns, you can see where the model’s focus lies, revealing relationships within the data. Keep exploring to uncover how these focus patterns improve model performance and interpretability across various applications.

Key Takeaways

- Attention mechanisms enable transformers to weigh input parts, capturing context and relevant features for improved understanding.

- Self-attention calculates importance scores using query, key, and value vectors to focus on relevant input tokens.

- Multi-head attention processes multiple attention operations in parallel, capturing diverse features for richer representations.

- Attention heatmaps visualize focus distribution across input sequences, aiding interpretability and transparency.

- Proper understanding of attention helps reveal model behavior, biases, and dependencies within transformer architectures.

The Basics of Attention in Machine Learning

Attention mechanisms allow models to focus on the most relevant parts of the input when making predictions. Instead of treating all input features equally, attention assigns each one an importance score, and the model weighs its inputs by those scores. This is particularly useful for complex data, such as language or images, where not all details are equally relevant. As a result, attention helps a neural network capture context and improve performance, and the scores themselves give you a view into which inputs influenced the output. It’s a key step toward more transparent and effective AI models.

How Transformers Use Attention to Process Data

Transformers use a form of attention called self-attention, in which each token in a sequence can attend to the other tokens in the same sequence. This lets the model weigh different parts of the input dynamically, capturing context and relationships. Attention has limitations, though: it can overlook subtle details, and because the weights are learned from data, it can amplify biases in that data if not monitored carefully. Here’s how transformers use attention:

- They identify relevant information by assigning weights to different input tokens.

- These weights help the model focus on important words or phrases.

- Attention mechanisms enable understanding of context across long sequences.

- Awareness of attention limitations helps prevent over-reliance on certain data points, promoting responsible AI use.

The Mechanics Behind Scaled Dot-Product Attention

To understand scaled dot-product attention, start with how attention scores are calculated: you take the dot product of each query vector with each key vector and divide by the square root of the key dimension. You then apply the softmax function to these scaled scores to get a weight for each input, and use the weights to form a weighted sum of the value vectors. This lets the model focus on relevant information efficiently.

Calculating Attention Scores

Calculating attention scores means measuring how much focus each input element should receive, based on how relevant the elements are to one another. You compare queries and keys through a dot product, then scale and normalize the results so they are comparable across inputs. This tells the model which parts of the input matter most. Here’s how it works:

- Compute dot products between queries and keys to get raw scores.

- Scale the scores by dividing by the square root of the key dimension for stability.

- Apply softmax to convert the scaled scores into weights between 0 and 1 that sum to one.

- Use these scores to assign attention weights, guiding focus during the next steps.

This approach lets the attention mechanism distribute focus according to input relevance.
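The first two steps above (dot products, then scaling) can be sketched in a few lines. This is a minimal illustration using plain Python lists, not a production implementation; the function name `scaled_scores` and the toy vectors are assumptions made up for the example.

```python
import math

def scaled_scores(queries, keys):
    """Return the matrix of scaled dot-product scores, one row per query."""
    d_k = len(keys[0])            # dimension of each key vector
    scale = math.sqrt(d_k)
    return [
        [sum(q_i * k_i for q_i, k_i in zip(q, k)) / scale for k in keys]
        for q in queries
    ]

# Toy example: 2 queries and 3 keys, each of dimension 4 (values are made up).
queries = [[1.0, 0.0, 1.0, 0.0],
           [0.0, 2.0, 0.0, 2.0]]
keys = [[1.0, 0.0, 1.0, 0.0],
        [0.0, 1.0, 0.0, 1.0],
        [1.0, 1.0, 1.0, 1.0]]

scores = scaled_scores(queries, keys)
print(scores)
```

With these inputs the key dimension is 4, so every dot product is divided by 2, and `scores` comes out as `[[1.0, 0.0, 1.0], [0.0, 2.0, 2.0]]`. Each row holds one query's raw relevance to every key, before any normalization.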

Applying Softmax Function

Once you have computed the scaled attention scores, applying the softmax function turns them into a set of normalized weights. The weights are all positive and add up to one, so you can read them as a probability distribution over the input tokens. Because softmax exponentiates each score, higher scores receive disproportionately higher weights while lower scores are pushed toward zero. The model then uses these weights to emphasize the most relevant tokens when it combines the value vectors, which leads to better context understanding.
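As a small illustration of this step, here is a sketch of softmax applied to one row of scores. The scores are made up, and subtracting the maximum before exponentiating is a common numerical-stability habit rather than something the description above requires.

```python
import math

def softmax(scores):
    """Turn one row of raw scores into positive weights that sum to 1."""
    highest = max(scores)                       # subtract the max for numerical stability
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

raw_scores = [2.0, 1.0, 0.0]    # made-up scaled scores for a single query
weights = softmax(raw_scores)
print([round(w, 3) for w in weights])
```

The weights come out at roughly 0.665, 0.245, and 0.090: the score gaps are equal, but the highest score takes about two thirds of the total weight.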

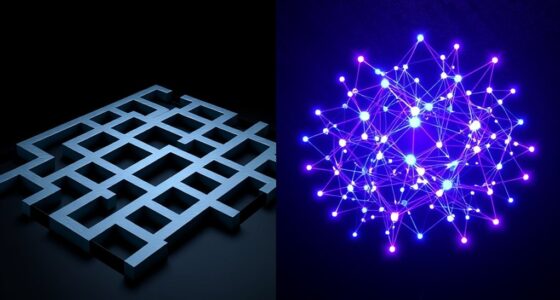

Multi-Head Attention: Enhancing Focus and Context

Multi-head attention allows transformers to focus on different parts of the input simultaneously, improving their ability to understand complex contexts. Each head runs its own attention operation on its own learned projection of the input, so different heads can capture different aspects of the data, making the model more robust. To grasp this better:

- Multiple attention heads process the data in parallel, increasing the number of focus points.

- Each head can capture distinct features, enriching the context.

- The head outputs are concatenated and combined, strengthening the model’s adaptability.

- Because each head works in a smaller subspace, the layer can learn nuanced patterns at a cost similar to that of a single full-size head.
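The parallel heads described above can be sketched as follows. This is a deliberately simplified illustration: a real layer applies learned projection matrices before splitting into heads and after concatenating them, whereas this sketch just slices each token vector into equal parts. The function names and toy vectors are assumptions for the example.

```python
import math

def softmax(row):
    highest = max(row)
    exps = [math.exp(x - highest) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Single-head scaled dot-product attention over lists of vectors."""
    scale = math.sqrt(len(keys[0]))
    outputs = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / scale for k in keys]
        weights = softmax(scores)
        # Weighted sum of the value vectors gives one output vector per query.
        outputs.append([
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ])
    return outputs

def multi_head_attention(x, num_heads):
    """Split each token vector into num_heads slices, attend per slice, concatenate.

    Simplification: learned input and output projections are omitted.
    """
    d_model = len(x[0])
    assert d_model % num_heads == 0, "d_model must be divisible by num_heads"
    d_head = d_model // num_heads
    head_outputs = []
    for h in range(num_heads):
        start, end = h * d_head, (h + 1) * d_head
        head_slice = [token[start:end] for token in x]
        # Self-attention: the same slice serves as queries, keys, and values.
        head_outputs.append(attention(head_slice, head_slice, head_slice))
    # Concatenate the per-head outputs back into vectors of size d_model.
    return [
        [value for head in head_outputs for value in head[t]]
        for t in range(len(x))
    ]

tokens = [[1.0, 0.0, 0.0, 1.0],
          [0.0, 1.0, 1.0, 0.0],
          [1.0, 1.0, 0.0, 0.0]]
output = multi_head_attention(tokens, num_heads=2)
```

Each head sees only its own slice of every token, so the two heads compute different attention weights over the same three tokens. The output keeps the input shape: three vectors of dimension four.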

The Role of Query, Key, and Value Vectors

In transformers, the core of attention lies in the interplay between query, key, and value vectors. The query expresses what information a token is looking for, while each key acts as a label for a piece of data. The value contains the actual information to be extracted. The alignment between a query and a key, measured by their dot product, determines how well that piece of data matches the request. When the vectors align closely, the attention weight increases, meaning the model focuses more on the corresponding value. This lets the model weigh different inputs dynamically and prioritize relevant information. Ultimately, these vectors work together to help the transformer selectively attend to important parts of the input, enabling nuanced understanding and contextual processing.
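To make the three roles concrete, here is a small sketch in which one query is compared against three keys and the result is a weighted blend of the matching values. The vectors are hand-picked for illustration (in a real model they are produced by learned projections), and the function name `attend` is an assumption.

```python
import math

def attend(query, keys, values):
    """Return (weights, output) for one query over all key/value pairs."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    # The output is the weighted sum of the value vectors.
    output = [
        sum(w * value[j] for w, value in zip(weights, values))
        for j in range(len(values[0]))
    ]
    return weights, output

# Hand-picked toy vectors: the query points in the same direction as the first key.
keys = [[4.0, 0.0], [0.0, 4.0], [-4.0, 0.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
query = [4.0, 0.0]

weights, output = attend(query, keys, values)
print(weights, output)
```

Because the query aligns with the first key and is orthogonal or opposite to the others, nearly all the weight lands on the first entry, and the output is very close to the first value vector, `[10.0, 0.0]`.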

Visualizing Attention: Understanding Model Focus

You can gain insight into a transformer’s focus by examining attention heatmaps, which highlight where the model is concentrating. Visualizing these focus areas helps you understand how the model processes information and which parts of the input it prioritizes when making decisions.

Attention Heatmaps Explained

Attention heatmaps let you visualize where a transformer model focuses its attention during processing. A heatmap plots the attention weights as a grid, typically with one row per query token and one column per attended token, so stronger colors mark larger weights. By examining a heatmap, you can see which tokens the model weighs most heavily and how attention is distributed across the input, which helps you interpret its outputs more effectively.
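A heatmap is usually drawn with a plotting library, but the idea can be shown with a dependency-free text rendering. The sketch below is only an illustration: the function name `render_heatmap`, the shade characters, and the attention weights are all made up, and the weights do not come from a real model.

```python
def render_heatmap(tokens, weights, shades=" .:*#"):
    """Render an attention matrix as text: denser characters mean higher weight."""
    width = max(len(t) for t in tokens)
    lines = []
    for token, row in zip(tokens, weights):
        cells = ""
        for w in row:
            # Map a weight in [0, 1] to one of the shade characters.
            index = min(int(w * len(shades)), len(shades) - 1)
            cells += shades[index] * 2
        lines.append(f"{token.rjust(width)} |{cells}|")
    return "\n".join(lines)

tokens = ["the", "cat", "sat"]
weights = [                    # made-up weights; each row sums to 1
    [0.70, 0.25, 0.05],
    [0.15, 0.55, 0.30],
    [0.05, 0.05, 0.90],
]
print(render_heatmap(tokens, weights))
```

Each printed row belongs to one query token, and each pair of characters in that row shows how much weight it places on the token in that column. In this toy matrix, the last row is almost entirely concentrated on its final column.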

Focus Areas Visualized

Have you ever wondered how transformers decide which parts of a sentence to focus on? Attention visualizations highlight where the model is paying the most attention across the tokens, revealing relationships and dependencies within the input. By examining them, you gain insight into which words influence others, making the model’s decision process more transparent. These maps show how the transformer distributes its focus throughout the sequence, emphasizing important words while downplaying less relevant parts. It’s a powerful way to understand model behavior beyond raw numbers or abstract explanations, including how the model handles complex language structures.

Interpreting Model Attention

Visualizing attention maps provides a direct window into how transformer models allocate focus across different parts of a sentence. It also helps you handle two common puzzles: models sometimes focus on unexpected words, and sometimes their focus is ambiguous. To interpret these maps effectively:

- Check which tokens attract the most attention, revealing what the model emphasizes.

- Watch for ambiguous focus, where attention is spread too thin for any token to stand out.

- Look for patterns across layers to see how focus shifts or consolidates.

- Use visualizations to identify potential biases or misalignments in the model’s focus.

These insights help demystify the model’s decision process, making attention more transparent and highlighting where unexpected focus may cause confusion.
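Two of the checks in the list above, finding the most-attended token and spotting attention that is spread too thin, can be approximated numerically. The sketch below uses Shannon entropy as a spread measure. That choice is an assumption made for illustration, not a method prescribed here, and the weights are made up.

```python
import math

def summarize_attention(tokens, weights):
    """For each query token, report its most-attended token and the row entropy."""
    summary = []
    for token, row in zip(tokens, weights):
        top = tokens[row.index(max(row))]
        # Shannon entropy in bits: 0 means all weight sits on one token,
        # log2(len(row)) means the weight is spread evenly.
        entropy = sum(-w * math.log2(w) for w in row if w > 0)
        summary.append((token, top, entropy))
    return summary

tokens = ["the", "cat", "sat"]
weights = [
    [1.0, 0.0, 0.0],        # sharply focused
    [0.25, 0.5, 0.25],      # moderately spread
    [1 / 3, 1 / 3, 1 / 3],  # uniform: no clear focus
]
for token, top, entropy in summarize_attention(tokens, weights):
    print(f"{token!r} attends most to {top!r} (entropy {entropy:.2f} bits)")
```

The three rows score 0.00, 1.50, and about 1.58 bits. A row near the maximum of log2(3) has no clear focus, so its "top" token should not be read as meaningful.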

Practical Applications and Impacts of Attention Mechanisms

The integration of attention mechanisms into transformer models has changed many practical applications by improving how models process and understand complex data. In real-world use cases, attention enables models to focus on relevant information, improving natural language processing, image recognition, and speech translation. For example, in healthcare, attention helps analyze medical records and imaging data; in finance, it supports forecasting and fraud detection. The ability to weigh different parts of the input allows for more nuanced insights, making models more adaptable. Attention also facilitates multi-modal learning, combining data from various sources for comprehensive analysis. Overall, attention mechanisms are changing how industries handle data, leading to new solutions and increased efficiency.

Frequently Asked Questions

How Do Attention Mechanisms Differ Across Various Transformer Architectures?

When you compare attention mechanisms across transformer architectures, you notice variants such as multimodal attention, which lets a model attend across multiple data types at once, and sparse attention, which improves efficiency by restricting each token to a selected subset of positions. These variations suit different tasks: some architectures are better for complex multimodal data, while others use sparse attention to prioritize speed and resource use on long inputs.

Can Attention Mechanisms Be Applied Outside Natural Language Processing?

Imagine a spotlight shining on different parts of a stage, revealing what’s important. That’s what attention mechanisms do, and they’re not limited to language—they’re used in vision tasks like image recognition. In cross-domain applications, attention helps models focus on relevant features, improving performance. So, yes, attention mechanisms can be applied outside NLP, enhancing fields like computer vision, speech processing, and even bioinformatics.

What Are Common Challenges or Limitations of Attention in Transformers?

Attention in transformers faces challenges with scalability and computational cost. Standard self-attention compares every token with every other token, so its time and memory cost grows quadratically with sequence length, which makes training and inference on long sequences slow and resource-intensive. As models grow, managing memory and processing power becomes harder. To address this, researchers develop efficient attention mechanisms, but balancing performance and resource demands remains a key challenge in advancing transformer applications.
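A quick count shows how fast the cost grows. The sketch below compares the number of query-key pairs scored by full attention with the number scored under a sliding window, which is one example of an efficient pattern. The function names and the window size of 128 are hypothetical choices for illustration.

```python
def full_attention_pairs(seq_len):
    """Every token attends to every token: seq_len squared scores."""
    return seq_len * seq_len

def windowed_attention_pairs(seq_len, window):
    """Each token attends only to tokens at most `window` positions away."""
    return sum(
        min(seq_len - 1, i + window) - max(0, i - window) + 1
        for i in range(seq_len)
    )

for n in (512, 2048, 8192):
    print(n, full_attention_pairs(n), windowed_attention_pairs(n, window=128))
```

Quadrupling the sequence length multiplies the full-attention count by sixteen, while the windowed count grows roughly in proportion to the length. The trade-off is that tokens outside the window are no longer compared directly.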

How Does Attention Impact the Interpretability of Transformer Models?

Attention can improve interpretability by highlighting which parts of the input influence predictions. Visualization techniques let you see the attention weights directly, making model behavior more transparent, and interpretability metrics let you quantify how well attention aligns with human reasoning. Together, these tools help you understand what the model focuses on, increasing trust and making it easier to diagnose issues or improve performance.

Are There Alternative Methods to Attention for Capturing Context in Neural Networks?

Yes. Other methods, such as convolutional layers, recurrence, and memory-augmented models, can also capture context. These alternatives process data differently, sometimes offering advantages like better interpretability or efficiency. While attention is powerful, exploring these techniques helps you choose the best approach for your model’s needs and your specific application.

Conclusion

By understanding attention mechanisms in transformers, you see how models focus on relevant data to improve accuracy. Some might think this complexity limits interpretability, but visualizations show you where the model’s focus lies. Rather than leaving the model a complete black box, attention gives you an inspectable, adaptable process that enhances tasks like language understanding. Appreciating this depth helps you make the most of transformer strengths and build AI applications that are more transparent and powerful.