Retrieval-Augmented Generation (RAG) combines information retrieval with natural language generation so that an AI system can draw on external sources when it answers. It searches a knowledge base for data relevant to the query, then feeds that data into the generation step, which grounds the response in source material and gives it more context. RAG is used to improve tasks such as customer support and research. The sections below explain how it works, where it helps, and where it still falls short.

Key Takeaways

- Retrieval-Augmented Generation (RAG) combines information retrieval with natural language generation for more accurate responses.

- It draws on external data sources at query time, extending what the model knows beyond its training data.

- RAG integrates retrieved information into the generation process to produce contextually relevant and factual outputs.

- The system consists of retrieval, knowledge integration, and generation components working together.

- RAG improves applications like customer support, research, and content creation by providing up-to-date and precise information.

Understanding the Concept of Retrieval-Augmented Generation

Retrieval-Augmented Generation (RAG) is a technique that combines the strengths of information retrieval and natural language generation to produce more accurate and informed responses. It works by pulling relevant context from external sources, which gives the system access to up-to-date or specialized knowledge. This step, known as context integration, helps the model address the specifics of your query. RAG also performs knowledge augmentation: it enriches the generated output with information retrieved from large datasets or databases. Instead of relying solely on what the model learned during pre-training, RAG pulls in relevant details at query time, which makes responses more precise and contextually appropriate. Because the knowledge base can be updated without retraining the model, RAG also supports decision-making that depends on current data, one of its key advantages for dynamic, adaptable AI applications.

How RAG Combines Retrieval and Generation Techniques

RAG systems combine retrieval and generation by inserting external information into the language model’s input before it writes a response. This gives the model access to relevant data beyond what it saw in training. When a user asks a question, the retrieval component searches a knowledge base or document store for pertinent information. The retrieved data is then fed into the generation process, typically as additional context alongside the question, so the model can produce a more accurate, informed response. Combining the two methods keeps outputs contextually appropriate and grounded in the retrieved sources. It also lets the system adapt to new information, producing responses that are richer and more reliable than those generated from internal training data alone.
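The retrieve-then-generate flow described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production design: the knowledge base, the function names (`tokenize`, `retrieve`, `build_prompt`), and the bag-of-words cosine scoring are all hypothetical choices made for the example, and the final call to a language model is left out because it depends on whichever model you use.

```python
import math
import re
from collections import Counter

# Hypothetical toy knowledge base; a real system would query a document store.
KNOWLEDGE_BASE = [
    "Refunds are processed within five business days of approval.",
    "Premium accounts include priority email and phone support.",
    "Passwords can be reset from the account settings page.",
]


def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query, documents, top_k=1):
    """Return up to top_k documents that share vocabulary with the query."""
    query_vec = Counter(tokenize(query))
    scored = [
        (cosine_similarity(query_vec, Counter(tokenize(doc))), doc)
        for doc in documents
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    # Drop documents with no overlap at all rather than padding the context.
    return [doc for score, doc in scored[:top_k] if score > 0]


def build_prompt(query, passages):
    """Place the retrieved passages ahead of the question as context."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}\nAnswer:"
    )


if __name__ == "__main__":
    question = "How long do refunds take?"
    # The resulting prompt is what would be sent to the language model.
    print(build_prompt(question, retrieve(question, KNOWLEDGE_BASE)))
```

Real deployments usually replace the word-count vectors with learned embeddings, but the shape of the flow stays the same: score documents against the query, keep the best matches, and hand them to the generator as context.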

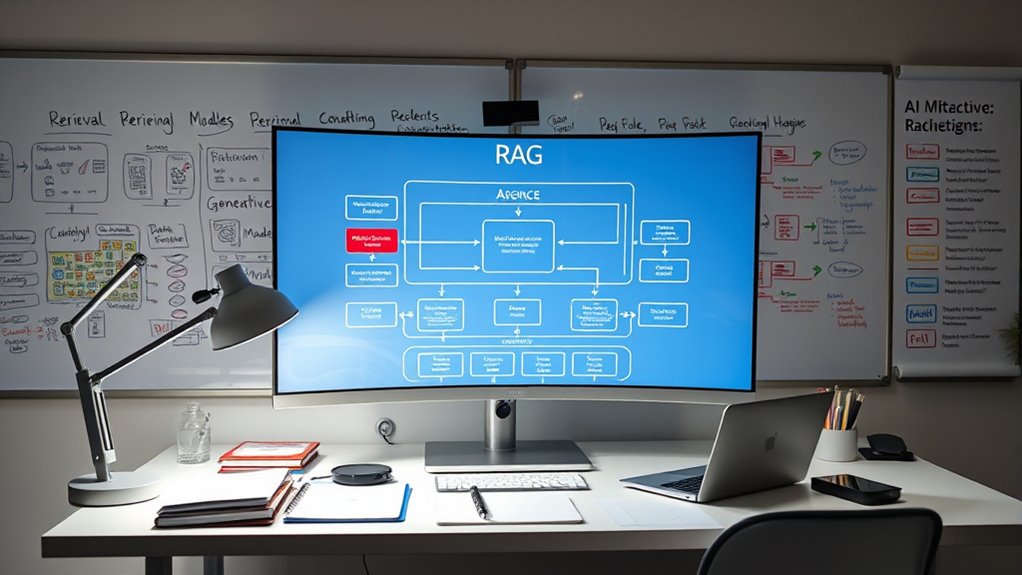

Key Components and Architecture of a RAG System

A RAG system is built around three key components that work together to deliver accurate and contextually relevant responses. First, the retrieval module fetches pertinent information using a retrieval strategy, so that relevant data is available to the generator. Second, the knowledge integration component combines the retrieved data with existing knowledge bases to assemble a thorough context. Third, the generation module uses this integrated information to produce a coherent response. The components depend on one another: the retrieval strategy determines how efficiently and accurately data is found, while knowledge integration determines how much of it the generator can actually use. This design lets the system adapt to different queries while balancing retrieval accuracy against generation quality, which makes RAG well suited to complex information retrieval and natural language generation tasks.
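One way to picture the three components is as interchangeable functions wired together by a small pipeline object. The sketch below is an assumption-laden illustration: `RagPipeline`, `keyword_retriever`, `integrate`, and `stub_generator` are hypothetical names, the retriever uses simple word overlap, and the generator is a stub that stands in for a real language model call.

```python
import re
from dataclasses import dataclass
from typing import Callable, List

# Hypothetical two-document store used only for this illustration.
DOCS = [
    "The retrieval module fetches passages from a document store.",
    "The generation module writes the final response.",
]


def words(text: str) -> set:
    """Lowercased set of alphanumeric tokens in the text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def keyword_retriever(query: str, top_k: int = 1) -> List[str]:
    """Retrieval module: rank documents by how many query words they share."""
    ranked = sorted(DOCS, key=lambda d: len(words(d) & words(query)), reverse=True)
    return [d for d in ranked[:top_k] if words(d) & words(query)]


def integrate(query: str, passages: List[str]) -> str:
    """Knowledge integration: merge retrieved passages and the query into one prompt."""
    context = "\n".join(passages) if passages else "(no passages found)"
    return f"Context:\n{context}\n\nQuestion: {query}"


def stub_generator(prompt: str) -> str:
    """Generation module placeholder; a real system would call a language model here."""
    return "[stub model output]\n" + prompt


@dataclass
class RagPipeline:
    """Wires the three components together; each one can be swapped independently."""
    retriever: Callable[[str], List[str]]
    integrator: Callable[[str, List[str]], str]
    generator: Callable[[str], str]

    def answer(self, query: str) -> str:
        passages = self.retriever(query)
        prompt = self.integrator(query, passages)
        return self.generator(prompt)


pipeline = RagPipeline(keyword_retriever, integrate, stub_generator)
```

Keeping the components behind plain function signatures makes the trade-off mentioned above easier to manage: you can tune or replace the retriever without touching the generator, and vice versa.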

Practical Use Cases and Benefits of RAG

By combining retrieval and generation, RAG systems serve a variety of practical applications across multiple industries. They enable effective knowledge integration, allowing you to generate accurate, context-aware responses by accessing relevant information from large datasets. In customer support, RAG improves chatbot accuracy and reduces response time, enhancing user experience. In healthcare, it assists doctors by surfacing up-to-date medical data and literature, supporting better decision-making. For research and education, RAG helps synthesize information quickly, making complex topics more accessible. You can also deploy RAG models for personalized recommendations, legal document analysis, and content creation. Overall, RAG improves efficiency and accuracy in how knowledge is accessed and used across these sectors.

Challenges and Future Directions in RAG Development

Despite its potential, developing Retrieval-Augmented Generation systems faces several significant challenges. Scalability challenges make it hard to process vast datasets efficiently, which limits real-time applications. Ethical considerations, such as bias and misinformation, demand careful handling to prevent harm. To address these issues, future research should focus on:

1. Enhancing algorithms to improve scalability without sacrificing accuracy.

2. Developing methods to identify and mitigate bias in retrieved data.

3. Establishing standards and frameworks for ethical deployment.

Addressing these issues can improve user trust and system reliability. Overcoming these hurdles will require innovation and collaboration. As you push RAG systems forward, balancing technical advancements with responsible practices will be essential for sustainable growth and trustworthy AI applications.
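To make the scalability concern concrete, here is a small sketch of one classic way to avoid scanning every document on every query: an inverted index that maps each word to the documents containing it. This is an illustrative assumption rather than a description of any particular RAG system (many use approximate nearest-neighbor indexes over embeddings instead), and the `InvertedIndex` class and its method names are hypothetical.

```python
import re
from collections import defaultdict


def tokenize(text):
    """Lowercased set of alphanumeric tokens in the text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


class InvertedIndex:
    """Maps each token to the ids of the documents that contain it."""

    def __init__(self):
        self.postings = defaultdict(set)
        self.documents = []

    def add(self, text):
        """Index a new document and return its id; no rebuild is needed."""
        doc_id = len(self.documents)
        self.documents.append(text)
        for token in tokenize(text):
            self.postings[token].add(doc_id)
        return doc_id

    def candidates(self, query):
        """Ids of documents sharing at least one token with the query."""
        ids = set()
        for token in tokenize(query):
            # .get avoids creating empty entries for unseen tokens.
            ids |= self.postings.get(token, set())
        return sorted(ids)


index = InvertedIndex()
index.add("Scalability limits real-time applications.")
index.add("Bias in retrieved data can mislead users.")
index.add("Standards guide ethical deployment.")
```

With this structure, a query only touches the documents that share a term with it, so the more expensive scoring step runs on a short candidate list instead of the whole collection. New documents can also be added at any time, which matters when the knowledge base keeps changing.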

Frequently Asked Questions

How Does RAG Differ From Traditional Language Models?

You might wonder how RAG differs from traditional language models. RAG combines knowledge integration and contextual retrieval, allowing it to fetch relevant information from external sources dynamically. Unlike traditional models that rely solely on pre-trained data, RAG actively retrieves context during generation, making responses more accurate and up-to-date. This approach enhances understanding, providing richer, more relevant answers by integrating external knowledge seamlessly into the generation process.

What Are the Main Limitations of RAG Systems?

Think of RAG systems as a busy librarian juggling books and digital info. Their main limitations are like crowded shelves—knowledge integration becomes complex, and keeping everything synchronized is tough. Scalability challenges also emerge as data grows, slowing down retrieval and response times. You might find that, despite their power, RAG systems struggle to efficiently manage vast, evolving knowledge bases, limiting their ability to deliver accurate, timely information at scale.

Which Industries Benefit Most From RAG Implementation?

You’ll find RAG systems especially beneficial in industries like healthcare, finance, and legal sectors, where accurate, up-to-date information is essential. These systems improve decision-making and streamline data retrieval, providing sector advantages like faster responses and enhanced insights. By implementing RAG, you can optimize industry applications, reducing manual effort and increasing efficiency, making these sectors more agile and responsive to evolving information demands.

How Can RAG Improve Information Retrieval Accuracy?

RAG can improve the accuracy of information retrieval in two ways. Context enhancement gives the system more to work with when interpreting a query, and improved query precision makes the results more relevant. Because the system pulls in external knowledge and narrows it to what matters for the question, it tends to return accurate, context-rich data and make fewer errors, which gives you more confidence in the answers you receive.

What Are Emerging Trends in RAG Research and Development?

You should watch for emerging trends in RAG research, like enhanced knowledge integration and real-time updates. Developers are working on better ways to combine external knowledge with generated responses, making outputs more accurate and context-aware. Real-time updates are also gaining attention, allowing RAG systems to stay current with the latest information. These advancements aim to improve performance and keep responses relevant in fast-changing environments.

Conclusion

So, now you’re basically a RAG expert—ready to let it fetch facts while you sit back and pretend you knew all along. Just remember, while RAG might seem like a magic wand, it still struggles with accuracy and bias. But hey, who needs perfection when you can have a system that’s almost as smart as your search history? Embrace the chaos—after all, isn’t that what innovation’s all about?